14. Apply Bayes Rule with Additional Conditions

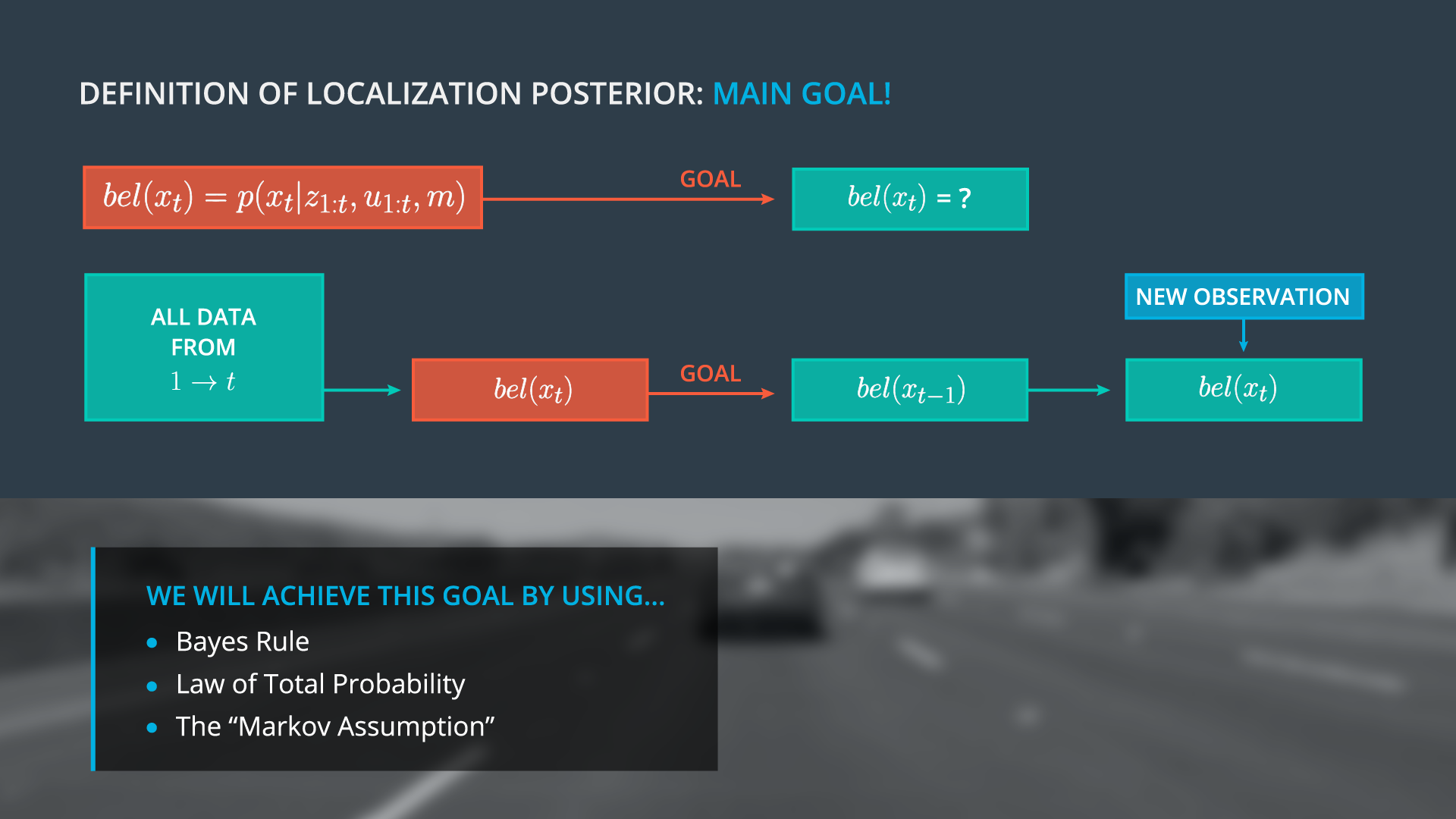

We aim to estimate the state belief bel(x_t) without carrying our entire observation history. We will accomplish this by manipulating the posterior p(x_t|z_{1:t},u_{1:t},m) to obtain a recursive state estimator. For this to work, we must show that the current belief bel(x_t) can be expressed in terms of the belief one step earlier, bel(x_{t-1}), and then use new data to update only the current belief. This recursive filter is known as the Bayes Localization Filter or Markov Localization, and it lets us avoid carrying historical observation and motion data. We will derive it using Bayes' Rule, the Law of Total Probability, and the Markov Assumption.
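To make the recursive structure concrete, here is a minimal sketch of one filter step on a small cyclic 1D grid. It is an illustration under assumed toy models, not the lesson's implementation: the function names, the motion noise `p_exact`, the sensor accuracy `p_hit`, and the example map are all hypothetical. The point to notice is that each step takes only the previous belief, the current control, and the current observation.

```python
import numpy as np

def predict(belief_prev, u, p_exact=0.8):
    """Motion update: sum over previous states (Law of Total Probability).

    Assumed toy motion model: the robot moves exactly `u` cells with
    probability p_exact and otherwise stays where it was.
    """
    moved = np.roll(belief_prev, u)  # cyclic world, assumed for simplicity
    return p_exact * moved + (1.0 - p_exact) * belief_prev

def sensor_likelihood(z, world_map, p_hit=0.9):
    """Assumed toy sensor model: p(z | x, m) for every grid cell x."""
    return np.where(world_map == z, p_hit, 1.0 - p_hit)

def update(belief_pred, likelihood):
    """Observation update: multiply by the likelihood, then normalize."""
    unnormalized = likelihood * belief_pred
    return unnormalized / unnormalized.sum()

def bayes_filter_step(belief_prev, u, z, world_map):
    """One recursive step: bel(x_{t-1}), u_t, z_t -> bel(x_t)."""
    return update(predict(belief_prev, u), sensor_likelihood(z, world_map))

# Hypothetical map: 1 marks a landmark, 0 marks free space.
world_map = np.array([1, 0, 0, 1, 0])
belief = np.full(world_map.size, 1.0 / world_map.size)  # uniform initial belief

# Only the latest belief is carried forward; the history is never stored.
for u, z in [(1, 0), (1, 0), (1, 1)]:
    belief = bayes_filter_step(belief, u, z, world_map)

print(belief)
```

The loop overwrites `belief` at every step, which is what avoiding the full history z_{1:t} and u_{1:t} looks like in practice.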

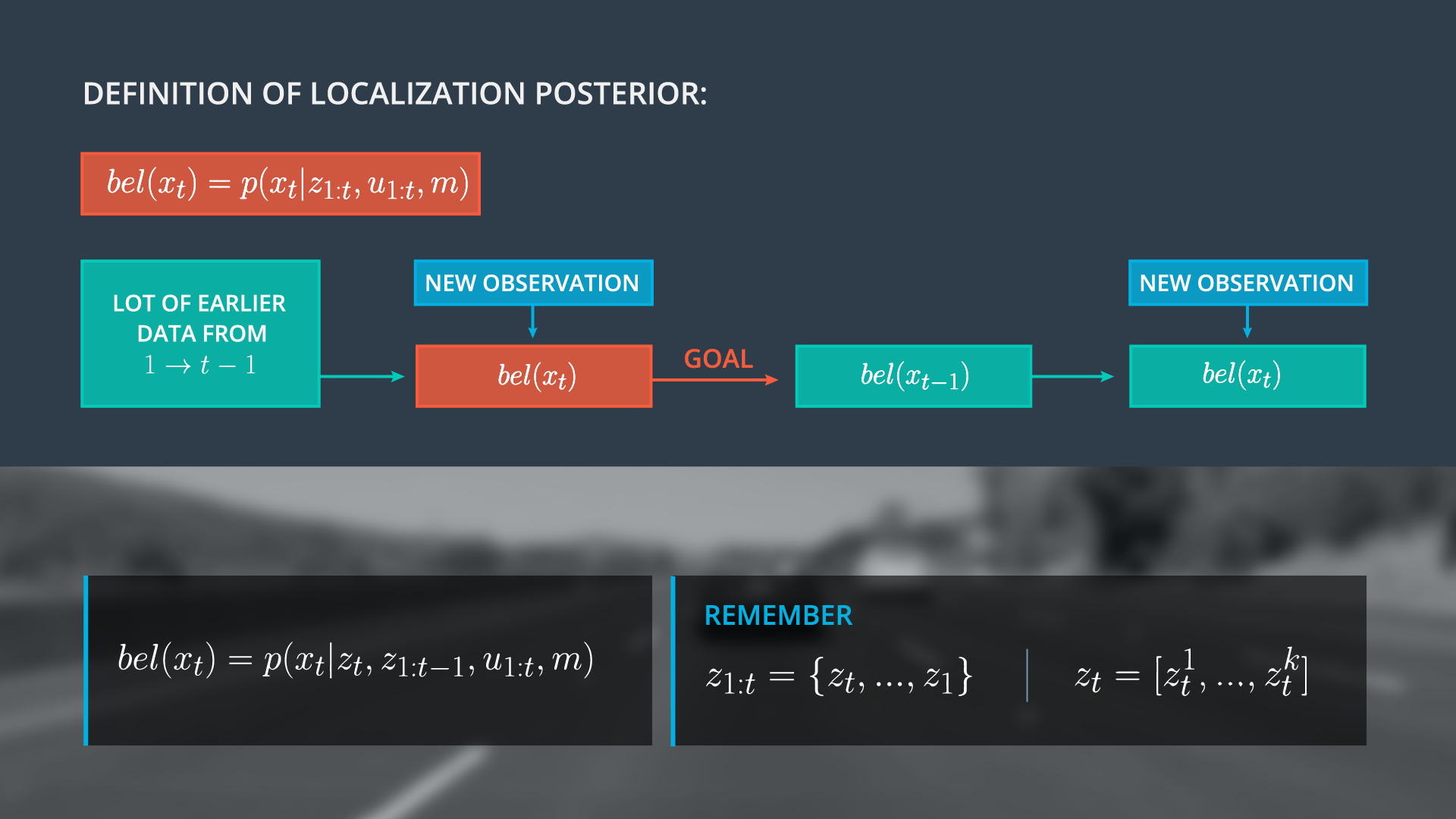

We take the first step toward our recursive structure by splitting the observation vector z_{1:t} into the current observation z_t and the previous observations z_{1:t-1}. The posterior can then be rewritten as p(x_t|z_t,z_{1:t-1},u_{1:t},m).

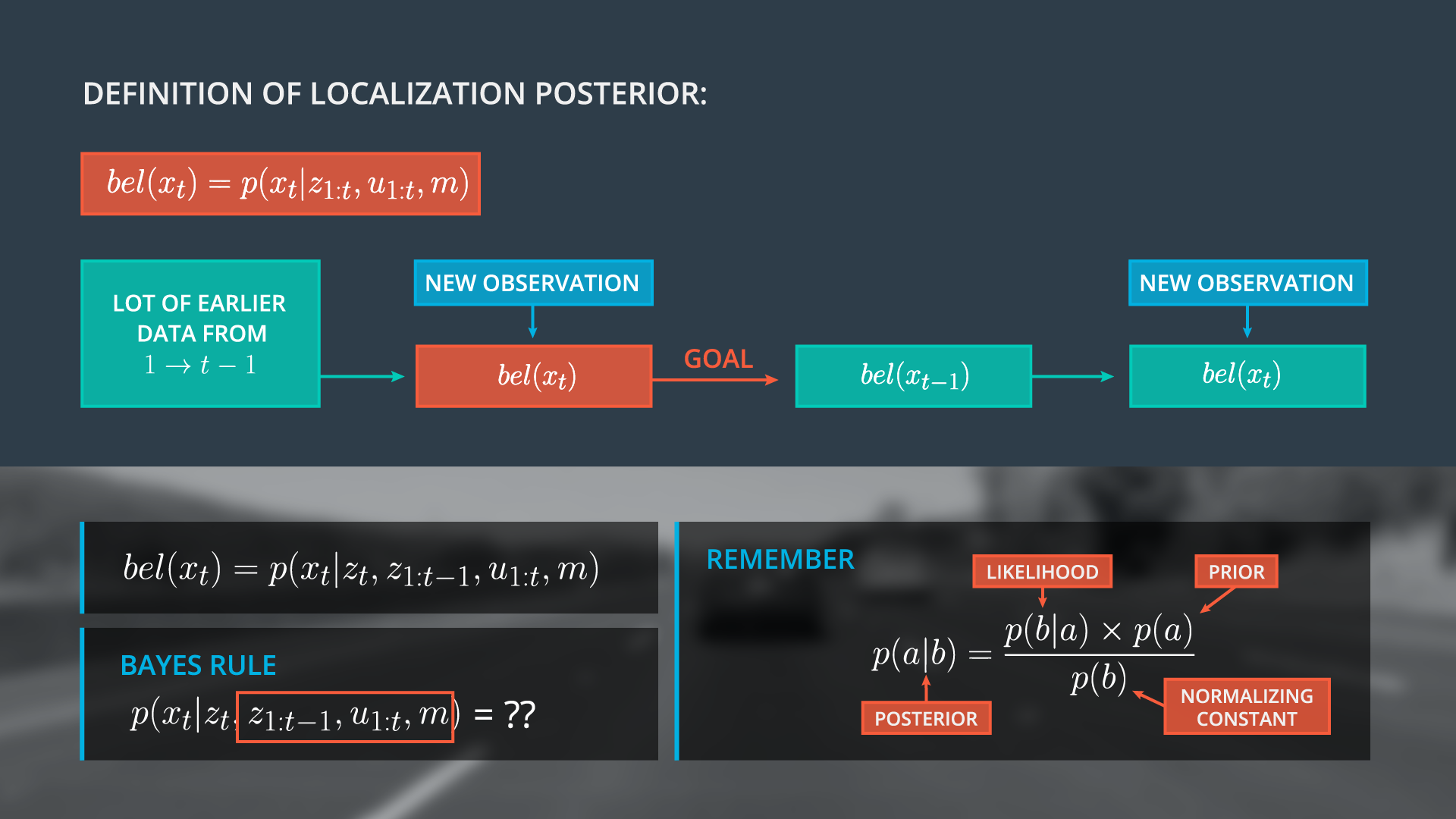

Now we apply Bayes' Rule, with an additional challenge: the right side contains several distributions (likelihood, prior, and normalizing constant). How can we best handle multiple conditions within Bayes' Rule? As a hint, we can use substitution, where x_t is a and the observation vector at time t, z_t, is b. Don't forget to include u and m as well.
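The substitution hint relies on a general fact: Bayes' Rule still holds when every term carries the same extra condition c, that is, P(a|b,c) = P(b|a,c) P(a|c) / P(b|c). The sketch below checks this numerically on a small random joint distribution. The variable sizes, the random seed, and the function names are arbitrary choices made for illustration.

```python
import numpy as np

# Random joint distribution P(a, b, c) with axes ordered (a, b, c).
rng = np.random.default_rng(0)
joint = rng.random((3, 4, 2))
joint /= joint.sum()

def conditional_bayes(joint, a, b, c):
    """P(a | b, c) computed as likelihood * prior / normalizer, all given c."""
    p_c = joint[:, :, c].sum()    # P(c)
    p_ac = joint[a, :, c].sum()   # P(a, c)
    p_bc = joint[:, b, c].sum()   # P(b, c)
    likelihood = joint[a, b, c] / p_ac  # P(b | a, c)
    prior = p_ac / p_c                  # P(a | c)
    normalizer = p_bc / p_c             # P(b | c)
    return likelihood * prior / normalizer

def direct_posterior(joint, a, b, c):
    """P(a | b, c) computed straight from the joint distribution."""
    return joint[a, b, c] / joint[:, b, c].sum()

# The two computations agree for every combination of a, b, and c.
all_match = all(
    np.isclose(conditional_bayes(joint, a, b, c), direct_posterior(joint, a, b, c))
    for a in range(3) for b in range(4) for c in range(2)
)
print(all_match)
```

Here c stands in for whatever conditions are not part of a or b; the extra condition appears in the likelihood, the prior, and the normalizing constant alike.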

Bayes Rule

Quiz

Apply Bayes' Rule to determine its right side, where the posterior P(a|b) is p(x_t|z_t,z_{1:t-1},u_{1:t},m).

(A)

(B)

(C)
